Designing the future of translation

Watson Hello is a proof-of-concept project showcasing the capabilities of IBM Watson's natural language processing.

Role: User Experience Designer

Date: 2015

Skills: User experience, wireframing, user research, rapid prototyping, user testing, scenario mapping

Tools: Adobe Illustrator, paper prototyping, Final Cut Pro

Watson Hello was a 3-week IBM new hire challenge to train designers to collaborate in multidisciplinary teams and apply the principles of IBM Design Thinking. Our design team collaborated with the stakeholders from the Watson organization to design a translation app using Watson APIs.

I was put on a team of 7 people: one User Researcher, two Visual Designers, three User Experience Designers, and one Front End Developer. Over the course of three weeks, we developed the proof of concept while learning the framework of IBM Design Thinking. As one of the User Experience Designers, I was responsible for concepting, wireframing, prototyping, and user testing. I presented these major deliverables to the Watson organization stakeholders.

The Challenge

The Watson stakeholders approached our team with two objectives: to improve the experience of using an app to communicate despite language barriers, and to showcase the capabilities of Watson's natural language processing APIs. We were tasked with delivering high-fidelity designs, prototypes, and roadmaps for future experiences within 3 weeks.

Watson APIs offer a library of cognitive services available on IBM Bluemix to build applications that leverage the power of cognitive computing. The services our team used were Speech to Text, Text to Speech and Language Translator. The market for language translation is saturated and competitive. To make a meaningful product that impacts users, we needed to discover where the pain points and needs were and define the opportunity for the app.

The Discovery

We needed to quickly identify the assumptions and questions we had about the project's objectives, conduct competitive analysis to learn the industry landscape, and speak with users to gain insights into their motivations, pain points, and needs. We also partnered with cognitive computing engineers to understand how natural language processing works, the current capabilities of the Watson APIs, and future feasibility.

Due to the tight timeline, all team members contributed to recruiting interviewees and conducting user interviews. To understand users' real needs, we interviewed people who require translation services daily. In a week, we interviewed 7 users.

The Approach

From our interviews, we discovered how people use various tools and methods for working through the challenge of learning a new language:

- Language training

- Using existing technology

- Dictionaries and other cultural books

- Immersion through entertainment

We drew concepts from strategies that users had already developed, such as having a handy list of common phrases, calling a local friend, or practicing scenarios with a host family. These concrete behaviors and our understanding of the users' environments led us to our approach:

We sought to combine human strategies with the power of Watson's cognitive computing.

Categorizing the interviewees and potential users helped us measure impact vs base-size

We synthesized the findings of these interviews into three distinct personas: the Doctor, the Volunteer, and the Knowledge Worker. Each persona has distinct objectives and pain points that we aimed to address. We tackled the project by designing for extreme examples in order to create a product that assists in diverse contexts involving language translation and also highlights the unique strength of Watson's natural language processing. We visualized the personas' experiences using journey maps, identifying the touchpoints and pain points where we should focus.

The Doctor

The Doctor needs to fully understand patients' symptoms and also communicate complex diagnoses and treatments. She relies on tools such as Google Translate, but is often frustrated that nuanced messages are not interpreted as intended. This miscommunication makes her feel helpless, as though she has failed her mission.

"I need to understand, before I can help."

"I want to help abroad but I don't, because I would feel ineffective."

The Volunteer

To prepare for traveling abroad, the Volunteer is trained in basic vocabulary and local customs and is given printed resources. However, actual collaboration with the local community reveals gaps in his training, and being in the field leaves little time to reference his notes. The language barrier is an overwhelming obstacle to achieving goals together with the community.

"Language is all about listening."

"Communication is understanding, understanding is trust."

The Knowledge Worker

Working at a multinational company, the Knowledge Worker is excited to share her domain expertise but quickly becomes frustrated and anxious as she struggles to keep up with her peers. As her team bounces ideas back and forth, the mental load of translating while speaking is overwhelming, and she often finds that she can't express the nuances in her ideas.

"Translating and thinking both take a lot of brainpower, and I can't do both at once."

"Missing one word can change the context of the entire conversation."

From the interviews and personas, we synthesized the key findings into three main insights into how our users approached translation. These insights became the design principles that guided our solution and kept it focused on our users' needs. We frequently referenced the design principles to align our team on design decisions and priorities, and they drove the key concepts of the mobile app's experience.

These three concepts, paired with the technological capabilities of Watson, are what will empower people to communicate in a different language, confidently and naturally.

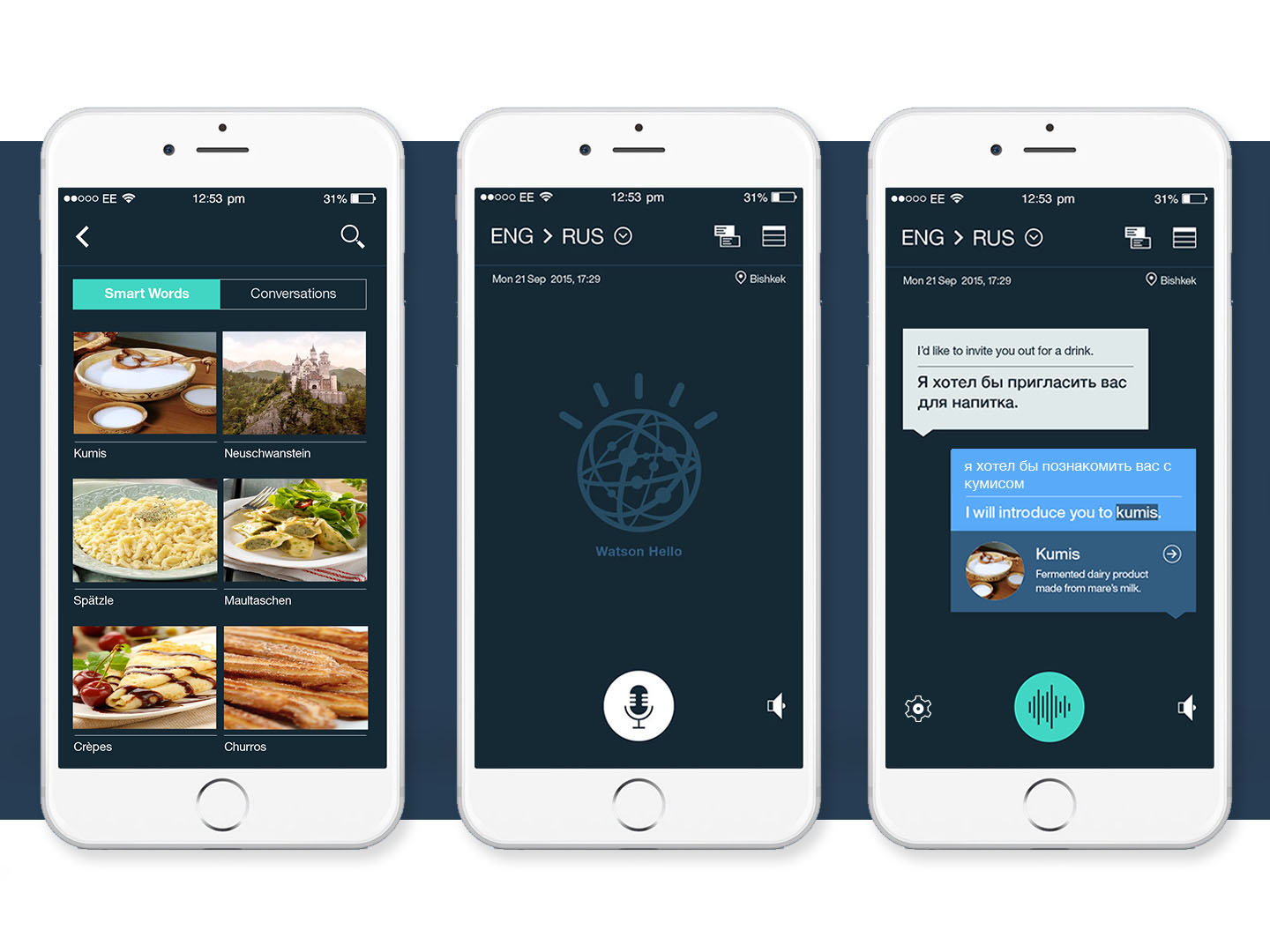

Introducing Watson Hello

Real time translation

By leveraging all three Watson APIs, we can create an interaction that minimizes the amount of time users spend inputting information. Instead, our app interprets and translates on the fly, ideally allowing users to hold a conversation while barely breaking eye contact. This builds implicit trust, one of the key components of successful communication.
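To make the flow concrete, here is a minimal sketch of how the three services might be chained: speech is transcribed, the transcript is translated, and the translation is spoken back. The class, function names, and signatures below are hypothetical stand-ins written for illustration; they are not the Watson SDK, and the stub services only mimic what Speech to Text, Language Translator, and Text to Speech would return.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass
class TranslationPipeline:
    """Chains speech -> text -> translated text -> speech.

    The three callables are hypothetical placeholders for the
    Speech to Text, Language Translator, and Text to Speech services.
    They do not reflect real Watson SDK signatures.
    """
    speech_to_text: Callable[[bytes, str], str]
    translate: Callable[[str, str, str], str]
    text_to_speech: Callable[[str, str], bytes]

    def run(self, audio: bytes, source: str, target: str) -> Tuple[str, str, bytes]:
        # Step 1: transcribe the speaker's audio in the source language.
        transcript = self.speech_to_text(audio, source)
        # Step 2: translate the transcript into the listener's language.
        translated = self.translate(transcript, source, target)
        # Step 3: synthesize audio for the translated text.
        spoken = self.text_to_speech(translated, target)
        return transcript, translated, spoken


# Stub services, for illustration only.
def fake_speech_to_text(audio: bytes, language: str) -> str:
    # Pretend the audio bytes are already the spoken words.
    return audio.decode("utf-8")


PHRASES: Dict[Tuple[str, str], Dict[str, str]] = {
    ("en", "es"): {"hello": "hola"},
}


def fake_translate(text: str, source: str, target: str) -> str:
    # Look the phrase up in a tiny table; fall back to the input text.
    table = PHRASES.get((source, target), {})
    return table.get(text.lower(), text)


def fake_text_to_speech(text: str, language: str) -> bytes:
    # Pretend the encoded text is synthesized audio.
    return text.encode("utf-8")


pipeline = TranslationPipeline(
    fake_speech_to_text, fake_translate, fake_text_to_speech
)
transcript, translated, spoken = pipeline.run(b"Hello", "en", "es")
```

Keeping each service behind a plain callable means the stubs could be swapped for real service clients without changing the conversation flow, which is the part the design work focused on.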

Various early wireframe iterations

Smart words

From our research, we recognized the importance of understanding context and culture as implicit parts of language. By using Watson's cognitive capabilities to identify proper nouns and words that have no English equivalent, we can surface relevant images, descriptions, links, and addresses to users, supplying them with timely and useful cultural understanding at the most crucial point of communication.

High fidelity visual design iterations.

Various early wireframe iterations. Right design by Zach Tempkin.

Library

The Library is tied directly to insights from the research that preparation and review help to smooth future interactions and build trust. Users can review Smart Words or transcripts of conversations they have saved to find important information or practice the language.

The Library also acts as a place to illustrate common words and phrases with pictures in case pronunciation is an issue.

Text to Text translation

We included text to text translation for situations when speaking out loud to a device may not be appropriate. The user can instead type the material into the text field. Text to text translation uses the Watson Language Translator API.

Example scenario with an early wireframe iteration.

How we got there

Concepting

Prior to designing any interfaces, we worked together to brainstorm and prioritize concepts. Through various design thinking exercises, we produced user need statements, concept metaphors, feasibility maps, and storyboards.

Wireframing

The User Experience Designers sketched out numerous iterations of the interfaces in varying fidelities. Early designs were tested with users through paper prototyping to rapidly evaluate our concepts. Once tried and tested, the designs were translated into mid-fidelity wireframes in Adobe Illustrator and tested again with interactive prototypes.

Visual Designs

The Visual Designers collaborated with the User Experience Designers to translate the wireframes into high-fidelity mockups. These high-fidelity designs were also used to help share our story with the stakeholders.

Playbacks

We met with the Watson stakeholders weekly to share feedback, drive alignment on the design decisions, and discuss future strategy. This collaborative effort between design, engineering and business allowed us to remain transparent, identify risks early, and construct a collective vision.

Reflection

At the end of 3 weeks, we received positive feedback from our users and stakeholders. We packaged the research, design and technical specifications for handoff to the Watson stakeholders. Any designs or concepts out of the scope of the project were annotated.

If we could have continued further than the 3 weeks, there are several things I would have liked to investigate:

- Dive deeper into designing for industry use cases, such as harnessing Watson's extensive medical or legal knowledge

- Examine issues regarding availability of the Internet or prevalence of mobile devices in specific regions

- Scale the project to wearables

- Work closely with the Watson development team to track how data collected in the app could help train Watson

- Incorporate additional Watson APIs, such as visual recognition